Building Microservices

Kenneth Wong

2025-02-16

10 min read

A recap of the notes I took while reading Building Microservices

Book

Microservices

I took notes on some chapters and skipped others, so this is not a complete recap. I highly recommend this book. It covers microservices as a whole, common practices in the real world, and their trade-offs.

It spends a lot of time on whether or not your company should adopt microservices at all, at times really trying to persuade readers to avoid them at all costs.

Chapter 4: Microservice communication styles

Event driven communication

Asynchronous, near real-time communication, usually implemented with a message queue.

In an event-driven architecture, it’s generally better to include all the details you’re willing to expose in the event message itself. This avoids tight coupling, where downstream services would otherwise need to call upstream services to fetch missing information. However, this approach also comes with trade-offs: removing a field later can break downstream consumers, and it effectively broadens the responsibility and scope of the application emitting the event.

This means we should be very careful about which fields we include in the event message, as removing a field later is a much bigger headache than adding one later.
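
As a minimal sketch of this idea, the snippet below uses a hypothetical `OrderPlaced` event (the event name, its fields and the `serialize`/`handle` helpers are all assumptions for illustration, not something from the book). The event carries everything the consumer needs, and the consumer only reads the fields it cares about, so adding a field later is harmless while removing one would break it.

```python
import json
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class OrderPlaced:
    """Hypothetical event: every field here becomes part of the public contract."""
    order_id: str
    customer_id: str
    total_cents: int
    currency: str


def serialize(event: OrderPlaced) -> str:
    # The full details travel with the event, so consumers never call back upstream.
    return json.dumps({"type": "OrderPlaced", "version": 1, "data": asdict(event)})


def handle(raw: str) -> str:
    # A tolerant consumer: it reads only the fields it needs and ignores the rest,
    # so the producer can add fields later without breaking it.
    data = json.loads(raw)["data"]
    return f"charge {data['total_cents']} {data['currency']} for order {data['order_id']}"


message = serialize(OrderPlaced("o-1", "c-9", 2500, "USD"))
print(handle(message))
```

If the producer later dropped `currency`, `handle` would raise a `KeyError`, which is exactly the kind of downstream breakage the note above warns about.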

Dead-letter queue

A dead letter queue is a special queue used to store messages that can’t be processed or delivered by the main queue after multiple attempts. It helps isolate problematic messages for later inspection, debugging, or retrying, so they don’t block or disrupt normal processing.
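
The behaviour can be sketched with plain in-memory Python structures. This is an illustration only: `MAX_ATTEMPTS`, `process` and `drain` are made-up names, and a real broker would handle the retry counting and the dead-letter routing for you.

```python
from collections import deque

MAX_ATTEMPTS = 3  # assumed retry limit; real brokers make this configurable


def process(message: dict) -> None:
    # Hypothetical handler: rejects messages that have no "amount" field.
    if "amount" not in message:
        raise ValueError("missing amount")


def drain(main_queue: deque, dead_letters: list) -> int:
    """Process every message, moving repeated failures to dead_letters."""
    processed = 0
    while main_queue:
        message, attempts = main_queue.popleft()
        try:
            process(message)
            processed += 1
        except ValueError as error:
            attempts += 1
            if attempts >= MAX_ATTEMPTS:
                # Give up: park the message with the reason for later inspection.
                dead_letters.append({"message": message, "error": str(error)})
            else:
                # Retry later by putting it back at the end of the queue.
                main_queue.append((message, attempts))
    return processed


queue = deque([({"id": 1, "amount": 10}, 0), ({"id": 2}, 0), ({"id": 3, "amount": 5}, 0)])
dead = []
handled = drain(queue, dead)
print(handled, dead)
```

The broken message is retried a few times and then set aside, so the two healthy messages are not blocked behind it.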

Topic message queue

A message queue that multiple consumers can subscribe to. This is a broadcast-style queue, used when we want a message to be received by all consumers.
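
A toy in-memory version of the broadcast behaviour might look like the following. `TopicBroker` and the topic name are hypothetical, and the sketch ignores persistence and delivery guarantees that a real broker would provide.

```python
from collections import defaultdict


class TopicBroker:
    """Toy broker: every subscriber of a topic receives every message."""

    def __init__(self) -> None:
        self._subscribers = defaultdict(list)

    def subscribe(self, topic: str, handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: dict) -> int:
        # Broadcast: call every handler registered for this topic.
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(message)
        return len(handlers)


email_inbox, audit_inbox = [], []
broker = TopicBroker()
broker.subscribe("order.placed", email_inbox.append)
broker.subscribe("order.placed", audit_inbox.append)
delivered = broker.publish("order.placed", {"order_id": "o-1"})
print(delivered, email_inbox, audit_inbox)
```

Both inboxes end up with their own copy of the message, which is the difference from a plain work queue where each message goes to a single consumer.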

Message broker

Message brokers tend to provide storage and rate limiting, and they hold on to messages while downstream consumers are unavailable. This abstracts away the retry logic you would otherwise need in a traditional synchronous request/response model.

Message brokers tend to run as a cluster so that messages survive a single machine failure.

Service Discovery

When a service has more than one instance behind a hostname, the DNS lookup should point to the load balancer, and the load balancer does the routing.

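ZooKeeper - a general-purpose coordination and configuration store that can be used for service discovery by storing the IP address of each service. For service discovery it has largely been overtaken by newer tools such as Consul.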

Consul - runs its own DNS server and can dynamically update text configuration files whenever an instance is spun up or taken down. It also has health monitoring and exposes a RESTful HTTP API, which makes it easy to integrate with services.

etcd - the key-value store behind Kubernetes, which provides service discovery out of the box. Simple and fast, and the best choice if everything runs on Kubernetes. In a mixed environment, however, it is better to run a dedicated service discovery tool like Consul.
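
To make the general idea concrete, here is a toy in-memory registry with heartbeat-based health checks. It is not the API of ZooKeeper, Consul or etcd; `ServiceRegistry`, its methods and the 10-second TTL are assumptions made up for this sketch, and time is passed in explicitly to keep the example deterministic.

```python
class ServiceRegistry:
    """Toy registry: instances stay discoverable while they keep sending heartbeats."""

    def __init__(self, ttl_seconds: float = 10.0) -> None:
        self._ttl = ttl_seconds
        self._last_seen = {}  # service name -> {address: time of last heartbeat}

    def heartbeat(self, service: str, address: str, now: float) -> None:
        # Registering and renewing are the same operation in this sketch.
        self._last_seen.setdefault(service, {})[address] = now

    def deregister(self, service: str, address: str) -> None:
        self._last_seen.get(service, {}).pop(address, None)

    def lookup(self, service: str, now: float) -> list:
        # Only return instances whose last heartbeat is recent enough.
        instances = self._last_seen.get(service, {})
        return sorted(a for a, seen in instances.items() if now - seen <= self._ttl)


registry = ServiceRegistry(ttl_seconds=10.0)
registry.heartbeat("orders", "10.0.0.1:8080", now=0.0)
registry.heartbeat("orders", "10.0.0.2:8080", now=0.0)
registry.heartbeat("orders", "10.0.0.1:8080", now=8.0)  # only the first instance renews
print(registry.lookup("orders", now=5.0))
print(registry.lookup("orders", now=15.0))
```

The second lookup only returns the instance that kept sending heartbeats, which mirrors how a discovery tool stops handing out the address of an instance that has been taken down.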

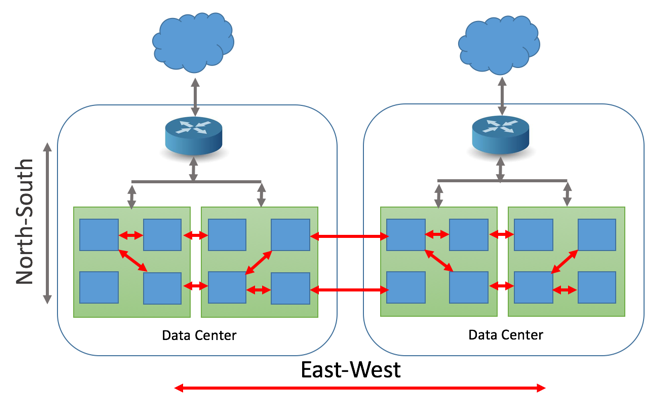

Application Gateway - sits at the perimeter of the cluster and handles communication across system boundaries (North-South traffic)

Basically an HTTP proxy with additional functionality

Mapping external requests to corresponding microservice

Use cases: protocol rewrite, API key management, rate limiting
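
The routing, API key and rate limiting duties can be sketched in a few lines. Everything here is hypothetical (the `Gateway` class, the route table, the key names), and the rate limit is a plain per-key counter with no time window, which a real gateway would not get away with.

```python
class Gateway:
    """Toy gateway: checks the API key, applies a quota, then picks a backend."""

    def __init__(self, routes: dict, api_keys: set, limit_per_key: int) -> None:
        self._routes = routes        # path prefix -> internal service name
        self._api_keys = api_keys    # keys that are allowed in
        self._limit = limit_per_key  # simplification: a fixed quota, never reset
        self._counts = {}

    def handle(self, path: str, api_key: str) -> tuple:
        if api_key not in self._api_keys:
            return 401, "invalid API key"
        used = self._counts.get(api_key, 0)
        if used >= self._limit:
            return 429, "rate limit exceeded"
        self._counts[api_key] = used + 1
        # Map the external path to the microservice that owns it.
        for prefix, service in self._routes.items():
            if path.startswith(prefix):
                return 200, service
        return 404, "no route"


gateway = Gateway(
    routes={"/orders": "order-service", "/users": "user-service"},
    api_keys={"key-1"},
    limit_per_key=2,
)
first = gateway.handle("/orders/42", "key-1")
rejected = gateway.handle("/users/7", "wrong-key")
second = gateway.handle("/users/7", "key-1")
third = gateway.handle("/orders/43", "key-1")
print(first, rejected, second, third)
```

None of the backing services need to know about API keys or quotas, since those concerns are handled once at the perimeter.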

Service Mesh - deals with communication between microservices within the perimeter (East-West traffic)

Provides consistency on certain aspects of inter-microservice communication

Reduces the need to abstract common behaviour into a shared library. A shared library is not ideal, because every microservice has to pick up the new version whenever the library changes. It also makes all microservices dependent on the library, which makes it hard to change.

Since east-west traffic volume is higher than north-south, we have to keep latency low and make as few calls as possible. Therefore the mesh proxy actually resides on the same machine as the microservice instance. When the instance makes an RPC call, it thinks it is making a network call, but it is actually talking to the proxy on the same machine.

Chapter 6: Workflow
